Bitcoin price prediction based on time series predictive AI Model architecture (neural networks)

I want to say thank you to these guys: Jeremy Howard (fast.ai), Jason Brownlee (machinelearningmastery), and Lex Fridman. Most of what I know about machine learning & neural networks is from these guys.

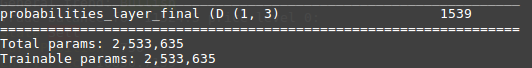

First to apply machine learning on anything you have to figure out what your machine learning objective is. Let's start with this, I have 100 thousand rows of data points collected every 5 minutes. My objective is to know how those data points from now affect the price of Bitcoin in the future, let's say in 12 hours. There are two ways, one way I could use linear regression and teach a model that given the chunk of dataset X the future price will be Y (ex $12,500) and the model would try to learn this regression and next time I give a chunk of my dataset X it should spit out what price it thinks this chunk of data represents the best. Another way is to do a binary classification. This way I don’t have unlimited output points, the price could go from 0 to infinity. I only have 3 outputs (down, stay, up) defined this way: price goes down more than my specified margin percentage, the price will stay in a given percentage range, the price will go up more than a specified margin percentage. In the end I chose to go with binary classification by getting much more reliable results.

I also had to choose the hidden layers structure of the model. I didn’t even try only the dense layers approach, which would be taking the last row of the dataset and trying to fit the model this way “last row data represents future price”. I am quite certain that price could ever be predicted only by trying to find the patterns on how those data points change over time and looking for the same patterns in the future. All this came from trying to learn the theory of classical technical analysis. People use price history, the shape of candles, volume spikes which happened some time ago and so on, drawing all kinds of shape formations, triangles, bull flags, bear flags, head, and shoulders and other patterns in order to make the guess what is the most likely price scenario to be seen next in the future.

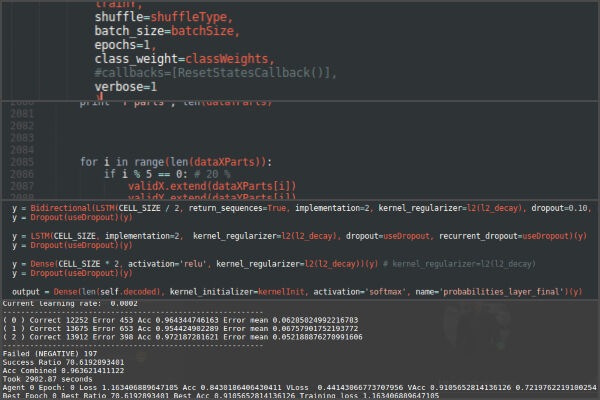

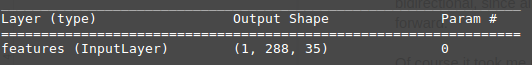

I chose to go with Long-Short-Term memory layers “LSTMs”. LSTM has feedback connections. It can not only process single data points (such as images), but also entire sequences of data (such as speech or video). I thought this is what I need for my price prediction problem. The problem with LSTM is that it is like using FOR cycle in any programming language, the longer the sequence is, the longer it takes to process it. It has to go through multiplication matrices and activation functions for each row in given input and on training part (backpropagation) it gets even worse training time doubles with additional sequence elements. So I had no choice but to limit the size of the sequence I’ll feed my network with. I’ve decided to split my dataset into daily chunks that would be 288 rows in one input item. So every time 5 minutes go past and the newest data points arrive I just take the last 288 rows (24h from now backwards) and feed those 288 rows to get price prediction 12 hours ahead from now.

Still, the sequence of 288 elements took too long to train which I could work with. Looking into possible solutions I’ve found this interesting IMDB CNN LSTM model example from Keras. You can find it here - https://github.com/keras-team/keras/blob/master/examples/imdb_cnn_lstm.py

It uses 1D convolution layers at the beginning of the model and I was quite aware at the moment of how convolutional neural networks work since all my previous experience was with vision models. The main idea was to take the sequence which are data points collected every 5 minutes, make convolutions with a kernel size of 6 elements, step size 1 that would represent 30 minutes inside one convolution (making our sequence to 288 steps of 30 minutes convolutions), then use the max-pooling layer to select strongest features from 6 convolutions, making 6 convolutions into 1 and eureka we have shortened the sequence from 288 elements to 48. Now each sequence element represents 30 minutes instead of 5. And LSTM can quite fastly go through 48 sequence elements compared to 288 I had before.

Finally, I chose to go with a double LSTM layer instead of a vanilla approach, so my model could find deeper patterns of representations in the data. And the first layer of LSTM I chose to be bidirectional since all kinds of patterns in sequence might go backwards not necessarily forward.

Of course, it took me months of different model configurations, different regularization attempts, different features selection to end up with this.

I ended up with 2.5M trainable parameters, quite a lot but compared to image models not that much. VGG16 would have 60M trainable params for example.

Posted 5 years ago by Darius

Add a comment